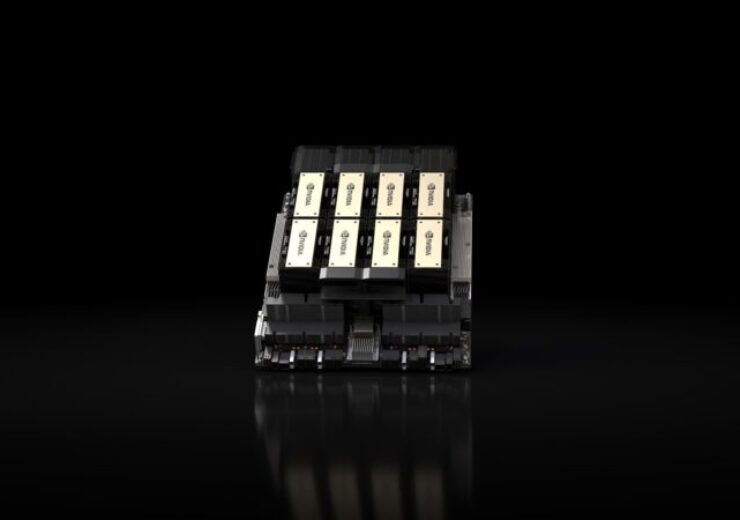

Based on the NVIDIA Hopper architecture, the new platform incorporates the NVIDIA H200 Tensor Core GPU with advanced memory and is expected to handle vast quantities of data for generative AI and high-performance computing workloads.

Nvidia unveils new artificial intelligence computing platform dubbed HGX H200. (Credit: NVIDIA Corporation)

American technology company Nvidia has unveiled HGX H200, a new artificial intelligence (AI) computing platform aimed at accelerating AI supercomputing.

Built on the NVIDIA Hopper architecture, the new platform features the NVIDIA H200 Tensor Core graphics processing unit (GPU) with advanced memory.

It is expected to manage enormous amounts of data for generative AI as well as high-performance computing workloads.

Nvidia said the H200 is the first GPU to offer HBM3e, a faster, larger memory designed to accelerate generative AI and large language models.

The new memory also advances scientific computing for high-performance computing (HPC) workloads.

With HBM3e, the H200 offers 141GB of memory at 4.8 terabytes per second, nearly double the capacity of its predecessor, the NVIDIA A100.

According to Nvidia, the HGX H200 delivers the highest performance across a range of application workloads, including large language model (LLM) training and inference on the largest models, those exceeding 175 billion parameters.

The new platform is powered by NVIDIA NVLink and NVSwitch high-speed interconnects.
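To put the 175-billion-parameter figure in context, the short Python sketch below estimates how much memory a model's weights alone occupy and how many 141GB H200 GPUs would be needed just to hold them. It is a rough back-of-the-envelope estimate, not an Nvidia sizing tool: it assumes 2 bytes per parameter (FP16/BF16) by default, uses decimal gigabytes, and ignores activations, the KV cache, optimizer state and software overhead, all of which raise the real requirement. The function names are hypothetical.

```python
import math

# Per-GPU memory of the H200 as quoted in the article (decimal gigabytes).
H200_MEMORY_GB = 141


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in decimal GB.

    Assumption: 2 bytes per parameter (FP16/BF16) unless overridden.
    Activations, KV cache, optimizer state and overhead are ignored.
    """
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: int = 2,
                         gpu_memory_gb: float = H200_MEMORY_GB) -> int:
    """Lower bound on the number of GPUs needed just to store the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    params = 175e9  # the 175-billion-parameter threshold cited by Nvidia
    print(f"weights (FP16): {weight_memory_gb(params):.0f} GB")
    print(f"min H200 GPUs (FP16): {min_gpus_for_weights(params)}")
    print(f"min H200 GPUs (1 byte/param): {min_gpus_for_weights(params, bytes_per_param=1)}")
```

Under these assumptions, a 175-billion-parameter model needs about 350GB for its weights, more than twice a single H200's memory, so the model has to be split across several GPUs. That is why fast GPU-to-GPU links such as NVLink matter for models of this size.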

Nvidia said Google Cloud, Amazon Web Services (AWS), Microsoft Azure, and Oracle Cloud Infrastructure, along with CoreWeave, Lambda, and Vultr, will deploy H200-based instances starting in 2024.

Ian Buck, vice president of hyperscale and HPC at Nvidia, said: “To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory.

“With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

The new AI computing platform will be available from server manufacturers and cloud service providers beginning in Q2 2024.

Last month, Nvidia forged a partnership with Taiwan-based Hon Hai Technology Group (Foxconn) to develop factories and systems for the AI industrial revolution.