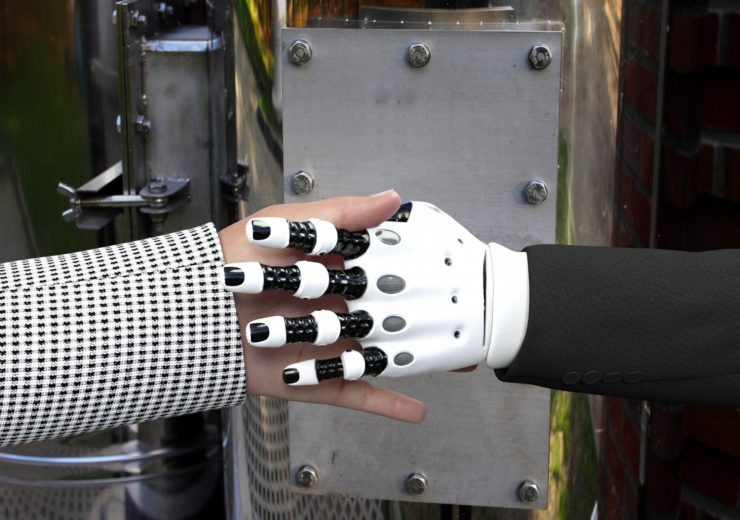

Building trust in your company is a better way to encourage the use of AI than increasing understanding of it, says Ved Sen of Tata Consultancy Services

Businesses can do more to increase trust in their AI products (Credit: Kai Stachowiak)

As businesses across the board increase investment in AI, Ved Sen, digital evangelist at Tata Consultancy Services, claims there are better ways to build trust among sceptics than resorting to hackneyed explanations of how the technology works.

Many years ago, when my father went shopping for fish in Calcutta, he would follow a simple rule: Either you know your fish, or you know your fishmonger.

And therein lies the distinction between trust and explainability that is at the heart of the AI debate.

AI and algorithms can draw remarkable results from scans and images, or even from our sounds and microbial information, telling us things that are impossible for humans to spot or deduce.

This allows us to fight diseases like cancer and extend life spans. Beyond healthcare, AI can give us better outcomes in diverse areas from saving the rainforests to hiring new staff, and from retail loyalty schemes to drug discovery.

Businesses use AI to automate decisions, such as when considering a home loan application or a medical insurance claim.

On the one side is a consumer or patient for whom the outcome has significant personal and emotional implications and costs.

The business, on the other hand, is trying to take emotion out of the equation by using a tool to make a decision based on an AI learning algorithm.

This poses an immediate problem whereby the black-box model computes an outcome in a way that is not easily interpreted, so the person being rejected for a loan can’t understand why.

Furthermore, a learning algorithm may evolve, so the computation used for rejecting one candidate and approving the next may actually be different. And although it may be “better”, it hardly seems fair.

Is there a need to provide an explanation for the decisions of AI?

As we allow more and more decisions to be made algorithmically, the need for explainable AI has grown.

However, in our everyday lives we interact with complex products and services, from hip surgery and fund management to hybrid cars and planes.

In doing so, we implicitly trust their capability without any real understanding of how they work, even when our very lives are at stake.

Going back to my shopping analogy, when buying fresh vegetables or fish, you might check them for freshness. But when we trust the seller, we don’t seek an explanation.

A brand is nothing but the bypassing of user testing for every product we use. You don’t need to test-run every pair of Nike shoes you buy, nor do you perform a user acceptance test when buying Microsoft Word. We are used to trusting brands in implicit ways.

The importance of trust for AI uptake

Trust is a multi-faceted entity — it implies competence, but also intent.

When we trust a plane with our lives as a passenger, we are counting on both the competence and the intent of the airline.

When we apply for a loan or make an insurance claim, by contrast, we don’t usually question the organisation’s competence but rather its intent, which is when transparency starts to play a role.

The need for transparency often arises from our lack of trust in the provider.

If we could trust a provider, then we might trust their AI as well.

For example, a multitude of different technologies go into car production, but we don’t queue up for an explanation of how they work before we drive one.

When we don’t trust a provider, explainable AI becomes a real need — we want to know why we are being excluded from an option or being given an answer which may feel like an opaque judgement.

Trust is built over time and through consistency, but it is often surprisingly resilient.

People may feel differently about you as a brand but they may continue to interact with you and trust you for your services if you work to address problems transparently.

This might be driven by a lack of alternatives, or simply by the fact that one mishap does not break a long relationship.

But truly building the type of trust that removes the need for explainability takes years of transparency.

In light of the recent crashes, Boeing is no doubt aware that it might be a long time before people are willing to implicitly trust its AI tools. Facebook is similarly addressing the lack of faith in its algorithms following recent data misuses.

So, what does that mean for explainable AI?

If you were flying on board an autonomous plane, you would absolutely need to trust the AI, but you probably wouldn’t need, or want, to understand exactly how that AI works.

However, from the perspective of the provider, a high level of explainability would be essential.

You would want replicability of decisions, simulation of a vast number of situations and a near-guarantee that the AI will work in both predictable and safe ways.

As algorithms evolve and learning systems become more sophisticated, we, as businesses, must be able to track their progress, provenance and decision flows.
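For readers who want something concrete, here is a minimal sketch of what tracking provenance and replicability of decisions could look like in practice. It is an illustration under stated assumptions, not a description of any real system: the `DecisionLog` class, the two toy lending rules and the applicant fields are all hypothetical. Every decision is stored with the model version and inputs that produced it, so an expert can later replay it and see whether the outcome can be reproduced.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical types: an applicant is a dict of numbers, a model is a yes/no rule.
Applicant = Dict[str, float]
Model = Callable[[Applicant], bool]


@dataclass
class DecisionRecord:
    model_version: str   # which version of the model made the decision
    inputs: Applicant    # the exact inputs it saw
    approved: bool       # the outcome it produced
    input_hash: str      # fingerprint of the inputs, for quick comparison


@dataclass
class DecisionLog:
    models: Dict[str, Model] = field(default_factory=dict)
    records: List[DecisionRecord] = field(default_factory=list)

    def register(self, version: str, model: Model) -> None:
        """Keep every model version so old decisions can be re-run later."""
        self.models[version] = model

    def decide(self, version: str, applicant: Applicant) -> bool:
        """Make a decision and record its provenance."""
        approved = self.models[version](applicant)
        payload = json.dumps(applicant, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(payload).hexdigest()
        self.records.append(
            DecisionRecord(version, dict(applicant), approved, digest)
        )
        return approved

    def replay(self, record: DecisionRecord) -> bool:
        """Return True if the recorded model version reproduces the outcome."""
        return self.models[record.model_version](record.inputs) == record.approved


# Two toy lending rules standing in for an algorithm that has "evolved".
log = DecisionLog()
log.register("v1", lambda a: a["income"] >= 3 * a["repayment"])
log.register("v2", lambda a: a["income"] >= 4 * a["repayment"])

applicant = {"income": 3500.0, "repayment": 1000.0}
first = log.decide("v1", applicant)    # approved under the older rule
second = log.decide("v2", applicant)   # rejected under the newer rule

same_inputs = log.records[0].input_hash == log.records[1].input_hash
all_replicable = all(log.replay(r) for r in log.records)
```

In this sketch the same applicant gets two different answers, and the log shows that the only thing that changed was the model version. That is the kind of trail a provider would need in order to explain an outcome after the fact.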

We also need to guard against the spectre of rogue AI, no matter how vague it seems, and against the perils of bias in data and training.

Explainable AI is a feature that organisations need to integrate so that, in case of an adverse event or a questionable outcome, their experts can decipher the rationale and conclude whether the AI has ‘gone rogue’, made a mistake, or simply jumped ahead of the game.

What consumers need is trustable AI, and that means AI that comes from trustable organisations.